Towards Continual and Fine-Grained Learning for Robot Perception

Wednesday, February 28, 2018 - 10:15 am

Innovation Center, Room 2277

COLLOQUIUM

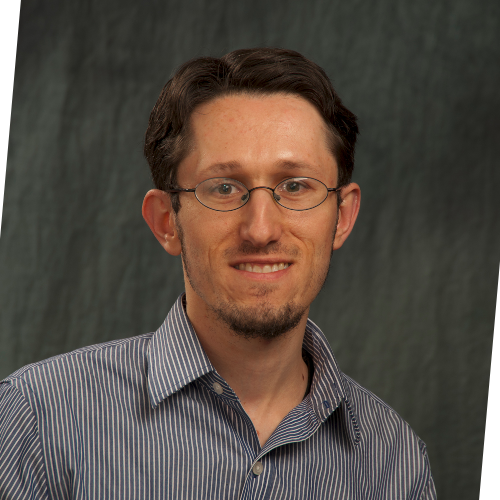

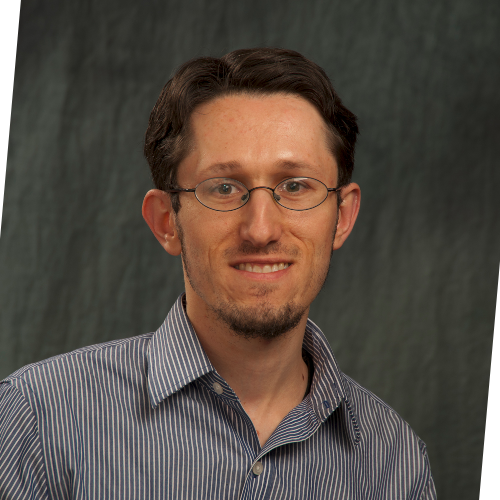

Zsolt Kira

Abstract

A large number of robot perception tasks have been revolutionized by machine learning, and by deep neural networks in particular. However, current learning methods are limited in several ways that hinder their large-scale use for critical robotics applications: they often focus on individual sensor modalities, do not attempt to understand semantic information in a fine-grained temporal manner, and are beholden to strong assumptions about the data (e.g., that the data distribution is the same when deployed in the real world as when trained). In this talk, I will describe work on novel deep learning architectures that move beyond current methods to develop a richer multi-modal and fine-grained scene understanding from raw sensor data. I will also discuss methods we have developed that use transfer learning to deal with changes in the environment or the existence of entirely new, unknown categories in the data (e.g., unknown object types). I will focus especially on this latter work, where we use neural networks to learn how to compare objects and transfer such learning to new domains using one of the first deep-learning-based clustering algorithms, which we developed. I will show examples of real-world robotic systems using these methods, and conclude by discussing future directions in this area, towards making robots able to continually learn and adapt to new situations as they arise.

Dr. Zsolt Kira received his B.S. in ECE at the University of Miami in 2002 and M.S. and Ph.D. in Computer Science from the Georgia Institute of Technology in 2010. He is currently a Senior Research Scientist and Branch Chief of the Machine Learning and Analytics group at the Georgia Tech Research Institute (GTRI). He is also an Adjunct at the School of Interactive Computing and Associate Director of Georgia Tech’s Machine Learning Center (ML@GT). He conducts research in the areas of machine learning for sensor processing and robot perception, with emphasis on feature learning for multi-modal object detection, video analysis, scene characterization, and transfer learning. He has over 25 publications in these areas, several best paper/student paper and other awards, and has been invited to speak at related workshops in both academia and government venues.

Date: Feb. 28, 2018

Time: 10:15-11:15 am

Place: Innovation Center, Room 2277

Abstract

A wide range of modern software-intensive systems (e.g., autonomous systems, big data analytics, robotics, deep neural architectures) are built to be configurable. These systems offer a rich space for adaptation to different domains and tasks. Developers and users often need to reason about the performance of such systems, making tradeoffs to change specific quality attributes or detecting performance anomalies. For instance, developers of image recognition mobile apps are interested not only in learning which deep neural architectures are accurate enough to classify their images correctly, but also in which architectures consume the least power on the mobile devices on which they are deployed. Recent research has focused on models built from performance measurements obtained by instrumenting the system. However, the fundamental problem is that the learning techniques for building a reliable performance model do not scale well, simply because the configuration space grows exponentially with the number of options and is impossible to explore exhaustively. For example, it will take over 60 years to explore the whole configuration space of a system with 25 binary options.
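As a rough check of the 60-year figure, the arithmetic can be sketched as follows. The abstract does not say how long a single measurement takes; the one-minute cost per configuration used here is an assumption chosen for illustration, and the function name is hypothetical.

```python
# Back-of-the-envelope estimate of exhaustive exploration time.
# ASSUMPTION (not stated in the abstract): one measurement takes one minute.
MINUTES_PER_MEASUREMENT = 1.0
MINUTES_PER_YEAR = 60 * 24 * 365  # 525,600 minutes in a non-leap year


def exhaustive_years(num_binary_options: int,
                     minutes_per_measurement: float = MINUTES_PER_MEASUREMENT) -> float:
    """Years needed to measure every configuration of n binary options."""
    configurations = 2 ** num_binary_options  # each option doubles the space
    return configurations * minutes_per_measurement / MINUTES_PER_YEAR


if __name__ == "__main__":
    years = exhaustive_years(25)
    print(f"{2 ** 25:,} configurations, about {years:.1f} years")
    # prints: 33,554,432 configurations, about 63.8 years
```

Under that assumption, 25 binary options give 2^25 configurations and roughly 64 years of measurement, and each additional option doubles the total.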

In this talk, I will start by motivating the configuration space explosion problem based on my previous experience with large-scale big data systems in industry. I will then present my transfer learning solution to the scalability challenge: instead of taking measurements from the real system, we learn the performance model using samples from cheap sources, such as simulators that approximate the performance of the real system with fair fidelity and at low cost. Results show that despite the high cost of measurement on the real system, learning performance models can become surprisingly cheap as long as certain properties are reused across environments. In the second half of the talk, I will present empirical evidence that lays a foundation for a theory explaining why and when transfer learning works, by showing the similarities of performance behavior across environments. I will present observations of the impact of environmental changes (such as changes to hardware, workload, and software versions) on a selected set of configurable systems from different domains, to identify the key elements that can be exploited for transfer learning. These observations demonstrate a promising path for building efficient, reliable, and dependable software systems. Finally, I will share my research vision for the next five years and outline my immediate plans to further explore the opportunities of transfer learning.

Pooyan Jamshidi is a postdoctoral researcher at Carnegie Mellon University, where he works on transfer learning for building performance models to enable dynamic adaptation of mobile robotics software as part of BRASS, a DARPA-sponsored project. Prior to his current position, he was a research associate at Imperial College London, where he worked on Bayesian optimization for automated performance tuning of big data systems. He holds a Ph.D. from Dublin City University, where he worked on self-learning fuzzy control for auto-scaling in the cloud. He has spent 7 years in industry as a developer and a software architect. His research interests are at the intersection of software engineering, systems, and machine learning, and his focus lies predominantly in the areas of highly configurable and self-adaptive systems (more details:

Abstract:

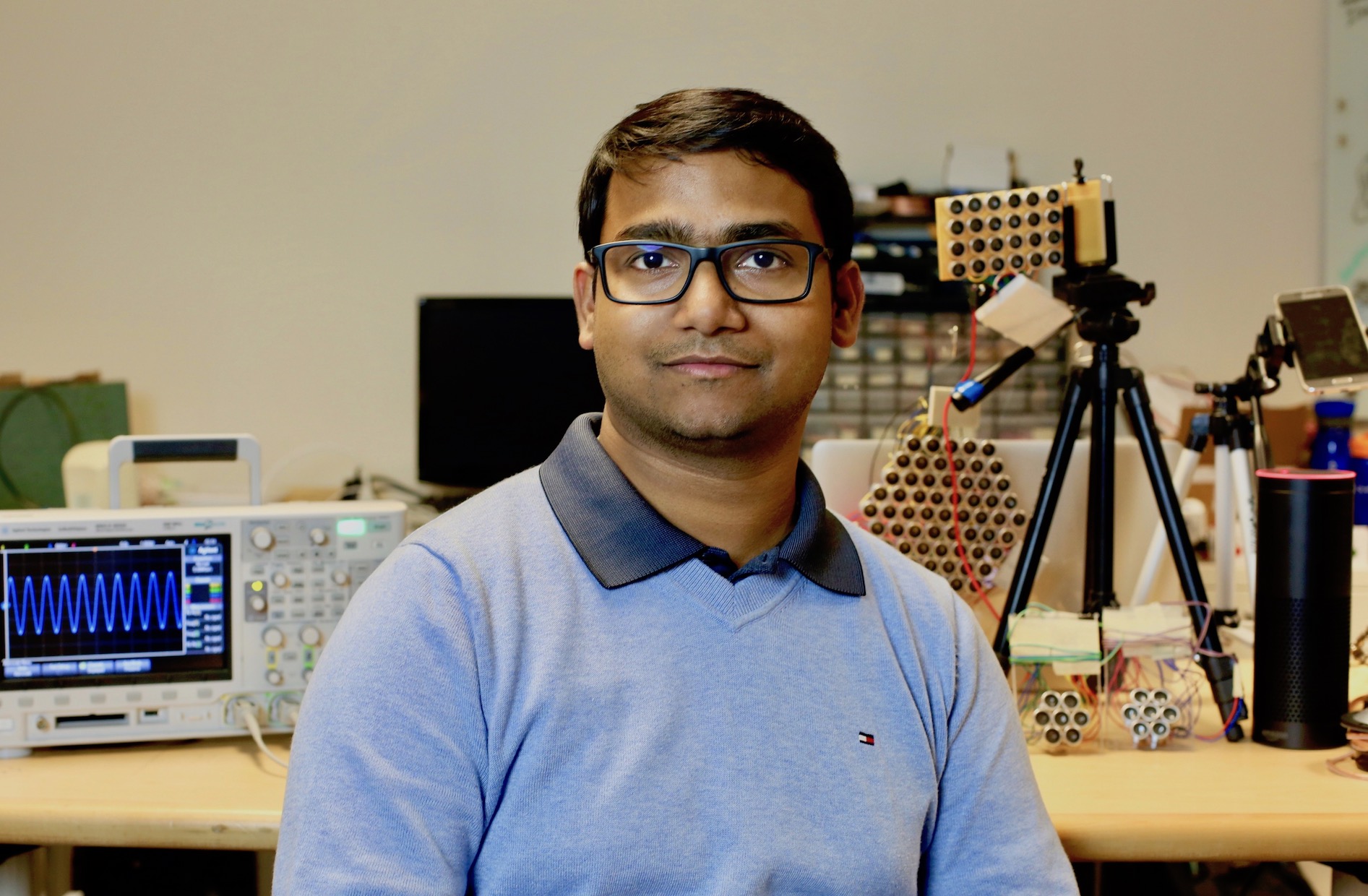

The recent proliferation of acoustic devices, ranging from voice assistants to wearable health monitors, is leading to a sensing ecosystem around us, referred to as the Internet of Acoustic Things or IoAT. My research focuses on developing hardware-software building blocks that enable new capabilities for this emerging future. In this talk, I will sample some of my projects. For instance, (1) I will demonstrate carefully designed sounds that are completely inaudible to humans but recordable by all microphones. (2) I will discuss our work with physical vibrations from mobile devices, and how they conduct through finger bones to enable new modalities of short-range, human-centric communication. (3) Finally, I will draw attention to various acoustic leakages and threats that arrive with sensor-rich environments. I will conclude this talk with a glimpse of my ongoing and future projects targeting a stronger convergence of sensing, computing, and communications in tomorrow’s IoT, cyber-physical systems, and healthcare technologies.

Bio:
