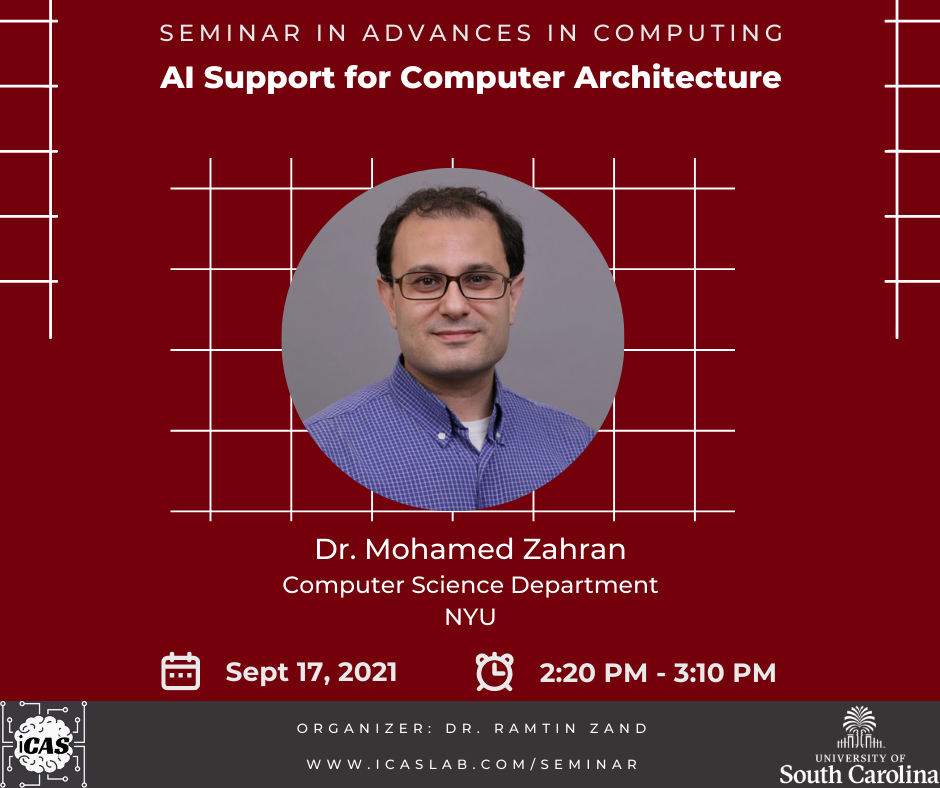

This week at the Seminar in Advances in Computing, we have an exciting talk by Dr. Mohamed Zahran from New York University. The talk will focus on machine learning and adaptive computer architectures. Please use the link below if you are interested in joining the meeting virtually. Also, please share it with your students if you believe the talk will benefit them.

Meeting Location:

Storey Innovation Center 1400

Meeting Link (click me)

BIO: Mohamed Zahran is a professor in the Computer Science Department at the Courant Institute of NYU. He received his Ph.D. in Electrical and Computer Engineering from the University of Maryland at College Park. His research interests span several aspects of computer architecture, such as the architecture of heterogeneous systems, hardware/software interaction, and biologically inspired architectures. He has served on many review panels for organizations such as the DoE and NSF, as a program committee member for many premier conferences, and as a reviewer for many journals. He will serve as general co-chair of the 49th International Symposium on Computer Architecture (ISCA), to be held in NYC in 2022. Zahran is an ACM Distinguished Speaker, a senior member of IEEE, a senior member of ACM, and a member of the Sigma Xi scientific honor society.

TALK ABSTRACT: For the past decade, people have been predicting the death of Moore's law, which now looks as likely as ever, for both technological and economic reasons. We already kissed Dennard scaling goodbye around 2004. Given that, we are left with two options for getting performance: parallel processing and specialized domain-specific architectures (e.g., GPUs, TPUs, FPGAs). But program characteristics vary, both over the course of a single program's execution and across different programs. Both general-purpose multicore and specialized architectures are designed with an average case in mind, whether that average case is a general-purpose parallel program or a specialized one, such as a GPU-friendly program. This is anything but efficient in an era where power efficiency is as important as performance. Building a machine that can adapt to program requirements is necessary but not sufficient. In this talk, we discuss the research path toward building a machine that learns from executing different programs and adapts for best performance when executing unseen programs. We also discuss the main challenges involved: a fully adaptable machine is expensive in terms of hardware, and transferring what is learned from one program's execution to another program's execution is difficult.